Artificial Intelligence (AI) has transformed the way we work, live, and interact with technology, and transcription is one of the clearest examples. From converting conversations to text at remarkable speed to enabling voice-based search, transcription AI has become an indispensable tool. However, like any technology, AI systems are only as good as the data they are trained on. When poor-quality data is fed into AI models, the result is predictable: errors in, errors out.

This post takes an in-depth look at the risks that low-quality data poses in AI development, especially in audio-to-text systems, and explains why high-quality data is essential for building accurate and reliable AI transcription services.

The Foundation of AI Development: Data

AI models rely heavily on training data to simulate human decision-making and behavior. Whether the task is identifying objects in an image or transcribing spoken words into text, a robust dataset forms the backbone of the system. For audio-to-text AI, that dataset typically includes thousands of hours of audio recordings paired with accurate reference transcripts.

Now imagine what happens when this data is riddled with problems. Mislabeled samples, background noise, poor audio quality, inaccurate transcriptions, or biases in the dataset can all produce critically flawed AI models. When such a model transcribes audio to text, every gap in data quality shows up as errors in the output, ranging from contextual inaccuracies to complete transcription failures.

The Risks of Poor-Quality Data in AI Transcription

1. Accuracy Issues

AI transcription depends on accurate training data. Even small inconsistencies in that data can surface as noticeable errors in the output text. Misrecognized accents, omitted words, and incorrect phrasing caused by noisy training audio all undermine the reliability of transcription services.

2. Lack of Adaptability

High-quality AI transcription depends on the system's ability to understand diverse accents, dialects, and linguistic nuances. Poor-quality data, such as narrow datasets that do not represent a range of speakers or situations, limits how well a model adapts. The result is often biased or incomplete transcripts for under-represented speakers, which restricts where the system can be used.

3. Propagation of Errors

Errors in AI don't stop at a single inaccurate transcript; they compound. If an audio-to-text model is trained on flawed transcriptions, it learns those mistakes and repeats them in every use case that relies on it, and if its output is later reused as training data, the errors feed back into the next model. This creates a domino effect that reaches everything from customer service applications to legal documentation and closed captioning.

4. Enterprise and Business Pitfalls

For organizations that rely on AI transcription for meeting notes, marketing insights, or customer interaction data, inaccuracies can have cascading consequences. Mistranscribed data can skew analytics, lead to poor decisions, and even create financial and reputational risks.

5. Regulatory and Legal Risks

Industries such as healthcare, finance, and law operate under legal and regulatory frameworks that demand highly accurate records. If AI audio transcription tools produce faulty results because of low-quality training data, businesses may face compliance issues, legal disputes, or even penalties.

Case in Point: AI Audio Transcription Challenges

Consider a customer service AI that transcribes customer calls for further analysis. If it was trained on error-filled datasets, it might misinterpret critical keywords or customer sentiment. For instance, a customer saying, "I’m unhappy with the service" might be transcribed as "I’m happy with the service." The impact? The complaint goes unflagged, the customer's problem goes unaddressed, and the company risks losing that customer's trust along with its service scores.

Another example can be seen in courtrooms where audio recordings are converted into text. Any misstep, such as incorrect speaker identification or missed legal jargon, could result in misinterpretation of facts and weaken a legal case.

Ensuring High-Quality Data in AI Transcription Development

For high-performing AI transcription systems, data quality control is non-negotiable. Here’s how developers can manage the risks:

1. Thorough Data Vetting

Before training, every piece of audio data and transcription should undergo rigorous checks for errors, biases, and inconsistencies.
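To make the vetting step concrete, here is a minimal Python sketch of automated pre-training checks. It assumes each training pair consists of a clip ID, a duration in seconds, and a reference transcript; the `Sample` class, the function names, and the speaking-rate bounds are all hypothetical and would need tuning for real data. Automated checks like these only catch obvious problems, so they complement human review of transcripts rather than replace it.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One hypothetical training pair: an audio clip and its reference transcript."""
    clip_id: str
    duration_s: float
    transcript: str


# Assumed plausible speaking-rate bounds in words per second (tune for your data).
MIN_WPS = 0.5
MAX_WPS = 5.0


def vet_sample(sample: Sample) -> list[str]:
    """Return the problems found in one sample; an empty list means it passed."""
    problems = []
    words = sample.transcript.split()
    if sample.duration_s <= 0:
        problems.append("non-positive duration")
    if not words:
        problems.append("empty transcript")
    if sample.duration_s > 0 and words:
        # A transcript far too long or short for its clip can signal a
        # mismatched or truncated transcript.
        wps = len(words) / sample.duration_s
        if not MIN_WPS <= wps <= MAX_WPS:
            problems.append(f"implausible speaking rate: {wps:.1f} words/s")
    return problems


def vet_dataset(samples: list[Sample]) -> dict[str, list[str]]:
    """Map each failing clip_id to its problems, also flagging repeated clip IDs."""
    report: dict[str, list[str]] = {}
    seen: set[str] = set()
    for sample in samples:
        problems = vet_sample(sample)
        if sample.clip_id in seen:
            problems.append("duplicate clip_id")
        seen.add(sample.clip_id)
        if problems:
            report.setdefault(sample.clip_id, []).extend(problems)
    return report


samples = [
    Sample("a1", 4.0, "thanks for calling how can I help"),
    Sample("a2", 10.0, ""),
    Sample("a3", 1.0, "this transcript has far too many words for one second"),
]
print(vet_dataset(samples))
# → {'a2': ['empty transcript'], 'a3': ['implausible speaking rate: 10.0 words/s']}
```

Flagged clips can then be routed to a human reviewer or dropped before training.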

2. Enhanced Diversity in Data Sets

AI audio transcription systems should be trained on a variety of accents, languages, and speaking styles so they adapt better and perform well for a wider range of users.
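One simple way to check diversity before training is to measure how much audio each speaker group contributes. The sketch below assumes every clip carries a group label (for example an accent or dialect tag from your own metadata) and flags any group below a chosen minimum share of total hours. The labels, hours, and 10% threshold are illustrative assumptions, not recommendations.

```python
from collections import defaultdict


def coverage_by_group(clips: list[tuple[str, float]]) -> dict[str, float]:
    """Return each group's share of total audio hours.

    `clips` holds (group_label, hours) pairs; labels such as accent or dialect
    tags are assumed to come from your own dataset metadata.
    """
    totals: dict[str, float] = defaultdict(float)
    for group, hours in clips:
        totals[group] += hours
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {group: hours / grand_total for group, hours in totals.items()}


def underrepresented(shares: dict[str, float], min_share: float = 0.10) -> list[str]:
    """List groups whose share falls below a chosen minimum (hypothetical default)."""
    return sorted(group for group, share in shares.items() if share < min_share)


clips = [
    ("us_general", 600.0),
    ("uk_english", 250.0),
    ("indian_english", 120.0),
    ("nigerian_english", 30.0),
]
shares = coverage_by_group(clips)
print(underrepresented(shares))  # → ['nigerian_english']
```

The same tally can be run on other metadata, such as recording scenario (one-on-one, group discussion, monologue), to check contextual coverage as well.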

3. Encoding Context

Contextual understanding is critical for AI to transcribe audio accurately. Training datasets should include multiple scenarios, such as one-on-one conversations, group discussions, and monologues.

4. Regular Validation and Updates

After launch, AI transcription models need continuous testing to keep pace with new challenges, such as evolving language patterns and industry-specific terminology.
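Ongoing validation usually means scoring the model against a held-out set of human-verified transcripts. A widely used metric for this is word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the model's output into the reference, divided by the number of reference words. The following is a minimal, dependency-free Python sketch; the function names are hypothetical, and real pipelines typically add text normalization (punctuation, numbers, casing rules) before scoring.

```python
def edit_distance(ref: list[str], hyp: list[str]) -> int:
    """Minimum substitutions + deletions + insertions turning ref into hyp."""
    # prev[j] = distance between the ref words processed so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution (or exact match when cost is 0)
            )
        prev = curr
    return prev[-1]


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER for one utterance, using simple lowercase whitespace tokenization."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    return edit_distance(ref, hyp) / len(ref)


def corpus_wer(pairs: list[tuple[str, str]]) -> float:
    """Corpus-level WER: total word errors divided by total reference words."""
    errors = 0
    ref_words = 0
    for reference, hypothesis in pairs:
        ref = reference.lower().split()
        errors += edit_distance(ref, hypothesis.lower().split())
        ref_words += len(ref)
    if ref_words == 0:
        raise ValueError("validation set contains no reference words")
    return errors / ref_words


# Example validation set: (human-verified reference, model output)
validation_set = [
    ("I'm unhappy with the service", "I'm happy with the service"),
    ("please cancel my order", "please cancel my order"),
]
print(word_error_rate(*validation_set[0]))  # → 0.2
print(corpus_wer(validation_set))
```

Note the first example: a single substituted word yields a WER of only 0.2, yet it reverses the customer's meaning. Because an aggregate score can hide errors like this, it can be worth reviewing mistakes on critical keywords alongside the overall number.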

Why Choose a Professional AI Transcription Service?

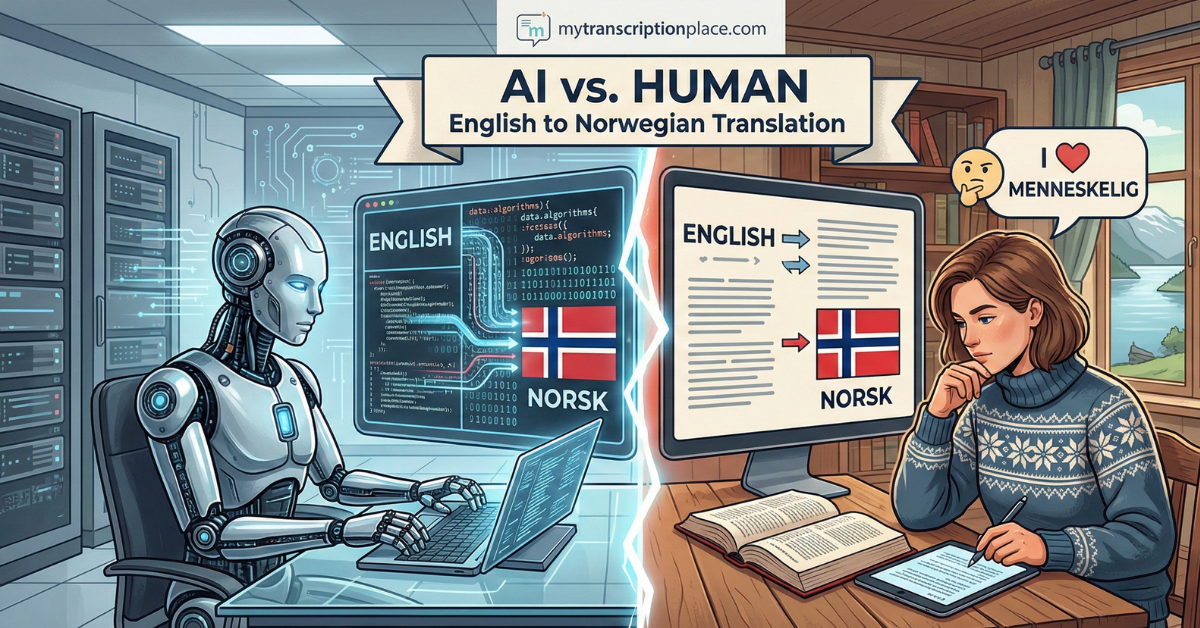

Although advances in AI have made audio transcription easier, poorly implemented systems can cause more harm than good. This is where professional AI transcription services come in. Providers like myTranscriptionPlace build their AI systems on carefully vetted, high-quality datasets for optimal performance. From handling complex audio files to delivering precise text transcriptions, such services provide dependable results and convenience across industries.

Conclusion

The maxim "errors in, errors out" underscores how critical data quality is to successful AI solutions. For AI transcription services, reliable data not only ensures transcription accuracy but also protects businesses from financial, legal, and operational risks. Investing in high-quality data and partnering with trustworthy transcription providers are therefore essential in an increasingly data-driven world.

If you’re searching for accurate, efficient, and dependable AI transcription services, look no further than myTranscriptionPlace. With cutting-edge technology and a relentless commitment to quality, myTranscriptionPlace delivers seamless audio-to-text AI solutions tailored to your needs, whether you are an individual or a business.

FAQs

1. What is poor-quality data in AI development, and why is it a problem?

Poor-quality data refers to datasets that are incomplete, inaccurate, inconsistent, biased, or noisy. It’s a problem because AI systems rely heavily on data to learn and make predictions. Flawed data leads to unreliable models, resulting in errors, inefficiencies, and poor outcomes.

2. How does poor-quality data affect the accuracy of AI predictions?

Poor-quality data introduces inconsistencies and errors into the training process, causing AI models to misinterpret patterns or miss critical information. This reduces the accuracy of AI predictions, making outputs less reliable and prone to mistakes.

3. What are the risks of training AI models on poor-quality data?

Training AI on poor-quality data can lead to biased results, errors in decision-making, limited adaptability, and the propagation of inaccuracies across systems. These issues can expose organizations to legal and compliance risks, reputational damage, and operational inefficiencies.

4. How can poor-quality data impact business decisions and performance?

Poor-quality data can skew analytics, leading to flawed insights and decisions. For businesses, this can result in inaccurate forecasting, missed opportunities, financial losses, and reduced customer satisfaction, ultimately weakening overall performance.